Why I’m launching "Wouldn't it be cool?": A new climate series made with generative AI

It’s easy to feel depressed about climate change. We’ve blown past the Paris Agreement’s 1.5°C target, storms are getting worse, and political commitment is... well, mixed at best.

But despite all appearances, there are plenty of reasons to believe that the future, while warmer, could also be pretty cool.

To explore this, I'm launching a new video series called "Wouldn't it be cool?" This project has two distinct missions that fuel each other:

- To find the hidden gems of real-world, optimistic climate solutions buried in the data.

- To openly experiment with how we tell these stories, using the latest in generative AI.

Mission 1: Finding Optimism in the Data

For the past decade, a part of my job was reading long reports that people spent months writing, reviewing, and running past the legal department, so that roughly 10 people could read them. It was my job to distill hundreds of pages into a short video that might steal people’s attention and, just maybe, move the needle on change.

When AI models became capable of reading and summarizing these documents, I wanted to take a stab at the big kahuna of reports that no one reads: the Intergovernmental Panel on Climate Change (IPCC) reports.

These reports are no joke. They run thousands of pages and are fought over by scientists and experts around the world. But deep inside the negotiated phrases and obscure jargon are countless hidden gems: real, tangible solutions and pathways to a future not yet realized.

This series is my attempt to find those gems and bring those possibilities to life.

Mission 2: Visualizing Climate Futures with GenAI

Just as important as the "what" is the "how." This series is also a vehicle to experiment with generative AI as a storytelling tool. We are still in a messy transition phase, but I truly believe generative AI has the potential to be transformative for communicating complex stories.

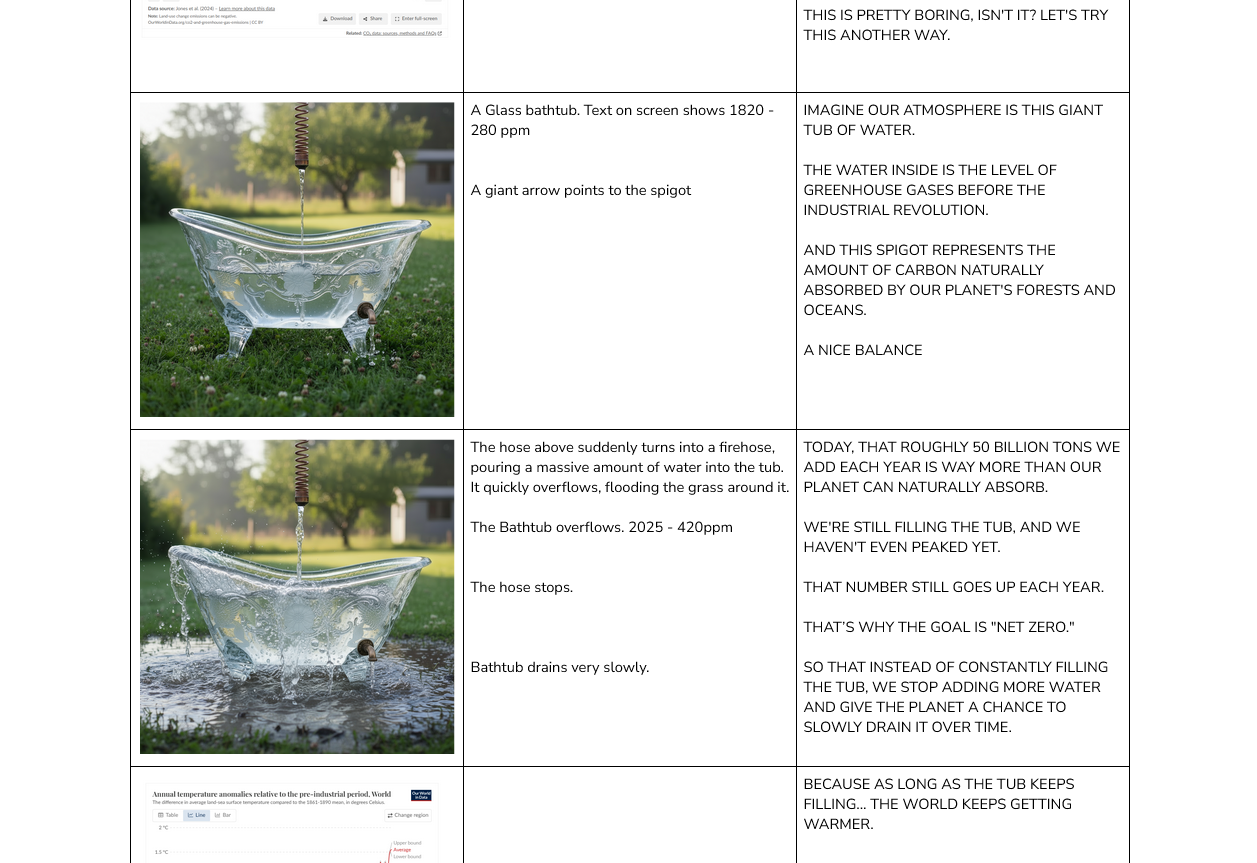

A perfect example came up in this first episode: I needed to explain "Net Zero."

The best metaphor is a bathtub: the water coming from the tap (our emissions) must be no more than what's going down the drain (what our forests and oceans can naturally absorb). When the two are in balance, the water level stops rising. That's net zero.
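For the tech-curious, the bathtub can be written as a few lines of arithmetic. This is only a rough sketch of the metaphor, not a climate model: the function name and all the numbers are made up for illustration, and the units are arbitrary.

```python
def simulate_bathtub(level, inflow, outflow, years):
    """Return the water level after each year for a constant tap and drain.

    level:   starting amount of water in the tub (arbitrary units)
    inflow:  water added by the tap each year (stands in for emissions)
    outflow: water leaving through the drain each year (stands in for absorption)
    """
    levels = []
    for _ in range(years):
        # The tub cannot hold less than zero water.
        level = max(0.0, level + inflow - outflow)
        levels.append(level)
    return levels


# Illustrative numbers only, not real emissions data.
rising = simulate_bathtub(level=100.0, inflow=10.0, outflow=4.0, years=3)
net_zero = simulate_bathtub(level=100.0, inflow=4.0, outflow=4.0, years=3)

print(rising)    # [106.0, 112.0, 118.0]
print(net_zero)  # [100.0, 100.0, 100.0]
```

With the tap running faster than the drain, the level climbs every year. Once the tap matches the drain, the level holds steady, which is the point the bathtub visual is meant to land.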

In the past, budget constraints would have meant a simple 2D animation. Now, for roughly the same cost, GenAI gives me far more compelling options to visualize that idea.

Watch: Wouldn’t it be cool?

You can see the result in the first episode, which just launched on YouTube. It sets the stage for the series and explains "Net Zero" (with the help of that AI bathtub).

Please watch, leave a comment with your thoughts, and subscribe on YouTube for future episodes!

The "Making Of": My GenAI Workflow

For my fellow creators and the tech-curious, here’s a transparent look at the workflow I used to create this episode. The research has been ongoing, using tools like NotebookLM to query the IPCC reports and other data.

Step 1 - Breaking the Story and Building a Board

I write in a two-column format, with the script in one column and visuals in the other.

The big difference now is I keep an image generation tool open as I write. This lets me instantly test visual ideas, styles, and looks. These aren't final images, just quick ideas that might later become image references.

I always write the first draft myself (it's usually too long). I then use models like Claude or Gemini to help tighten the language and improve phrasing.

Step 2 - Asset Generation (The Animatic)

I start by generating all my key start and end frames. Freepik is my workhorse here, as their unlimited image generation removes "credit anxiety." I primarily use Google’s Nano Banana and Seedream 4k (for gritty realism).

Once I have the initial images, I use ComfyUI with Qwen Image Edit to mask and add/remove elements. Photoshop's generative fill is also excellent for removing distractions.

All completed frames are then upscaled to 4K using a workflow in ComfyUI (though I also use Magnific in Freepik and will soon test Topaz’s photo tools).

Next, I record the main voiceover. For the rest of the audio, the music was a mix of Suno and the Adobe library, while the SFX and some of the other voices were generated with ElevenLabs.

I then create an animatic (a rough cut with the 4K images and audio elements). This gives me a sense of timing, which is useful when generating the video clips.
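To show why the timing matters, here is a small sketch of how shot lengths from an animatic could be turned into a generation plan. It assumes a video model that outputs clips of at most 5 seconds each. That limit, the shot names, and the durations are all hypothetical placeholders, not figures from this episode or from any specific tool.

```python
import math


def clips_needed(shot_seconds, max_clip_seconds=5.0):
    """How many generated clips it takes to cover one shot.

    max_clip_seconds is an assumed per-clip limit; check the actual
    limit of whichever video model you are using.
    """
    return math.ceil(shot_seconds / max_clip_seconds)


# Hypothetical shot list read off an animatic timeline: (name, seconds).
shots = [("bathtub_intro", 4.0), ("tap_closeup", 7.5), ("drain_wide", 10.0)]

plan = {name: clips_needed(seconds) for name, seconds in shots}
print(plan)  # {'bathtub_intro': 1, 'tap_closeup': 2, 'drain_wide': 2}
```

Knowing this before generating anything helps avoid paying for clips that are longer, or more numerous, than the edit actually needs.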

Step 3 - Animation and Video Generation

For lip-sync dialogue, I use Google Veo 3.1 (via Freepik). For other shots, I use Kling 2.1 or Wan 2.2, depending on the motion or camera movement I need.

I then swap those clips into the animatic edit to get a final timing lock. Once that is set, I take the clips used in the edit into Topaz Video and upscale them to 4K. Most of the motion graphics are simple animations done in After Effects.

The final assembly, color grade, and audio mix are done in Premiere Pro using conventional techniques.

What’s next?

In future episodes, we will tackle the complex challenges facing energy production, agriculture, construction, transportation, and more. We'll focus on solutions that don't just reduce the carbon in our daily lives, but also make the future better than it is today. Wouldn’t that be cool?

Speaking of the future of AI and creativity, I'll be attending Upscale Conf. in Malaga next week. If you're going to be there and want to chat about any of this, hit reply and let's connect!